Healthcare AI is advancing faster than ever. Hospitals now rely on AI agents to assist doctors, manage patient data, speed up approvals, and improve care outcomes. But as adoption grows, so do the risks. When AI systems fail to follow medical laws or data protection standards, patients and organizations suffer. In one widely discussed case, a hospital was forced to shut down an AI system after patient records were shared without proper consent. Events like this show why compliance is no longer optional in healthcare AI.

Ensuring compliance in healthcare-focused AI agent development is essential for patient safety, data protection, and long-term trust. This article explains how compliant healthcare AI works, what developers and hospitals must focus on, and why responsible AI development is shaping the future of medicine.

Why Healthcare AI Compliance Matters Right Now

Healthcare regulators and patients are paying closer attention to how AI systems handle sensitive medical data. As AI becomes part of daily clinical workflows, any mistake can directly affect lives. Compliance ensures that AI systems respect privacy, remain transparent, and operate within medical laws.

For readers, this topic matters because it connects technology with real-world impact. Compliance protects patients, supports doctors, and prevents costly failures that damage trust in digital healthcare.

How AI Is Used in Modern Healthcare

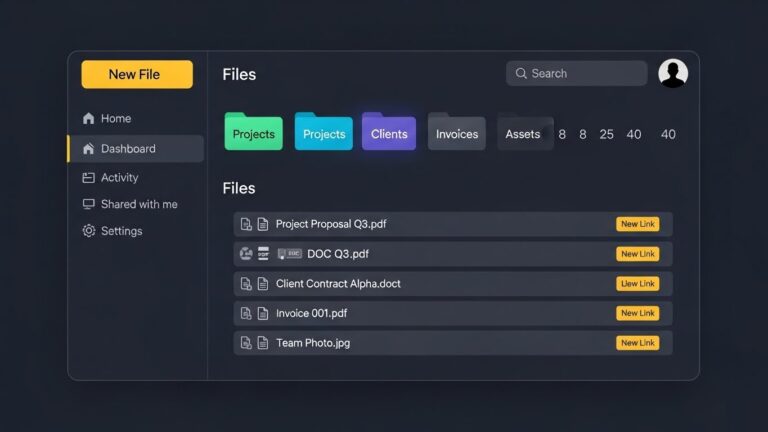

AI supports healthcare across diagnosis, administration, and patient management. It helps doctors analyze medical images, interpret lab results, and detect risks earlier. AI agents also manage administrative tasks like prior authorization by reviewing treatment plans and insurance rules.

Before AI, these processes took days and required manual effort. Today, compliant AI systems complete them faster while maintaining accuracy and security. AI does not replace doctors. It strengthens their ability to make informed decisions.

Understanding Compliance in Healthcare AI

Compliance in healthcare AI means following the medical laws, ethical standards, and data protection rules that exist to protect patients. These rules define how data is collected, stored, processed, and shared.

Key regulations include HIPAA in the United States, GDPR in Europe, and FDA guidelines for medical software. Compliant AI systems must explain how decisions are made, protect patient data, and ensure fairness. Compliance begins during design and continues throughout deployment, updates, and daily use.

Main Principles of AI Compliance in Healthcare

To build a compliant AI system, you need to include safety and privacy from the very beginning. Doing so helps AI systems stay transparent and follow healthcare laws at every level.

Some key principles include:

- Keeping all patient data encrypted during use and storage

- Getting permission before using any medical data

- Giving access only to approved team members

- Recording all actions for accountability
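Three of these principles (consent, restricted access, and action logging) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the `PatientRecord` shape, the `APPROVED_ROLES` set, and the `read_record` helper are hypothetical names chosen for the example. Encryption is left out because it would normally come from a vetted library rather than hand-written code.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical set of roles allowed to read patient data.
APPROVED_ROLES = {"physician", "nurse"}


@dataclass
class PatientRecord:
    patient_id: str
    consent_given: bool
    data: dict


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, actor, action, patient_id, allowed):
        # Store who did what, to which record, and whether it was permitted.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,
            "action": action,
            "patient_id": patient_id,
            "allowed": allowed,
        })


def read_record(record, actor, role, log):
    """Return the record data only if the role is approved and consent exists."""
    allowed = role in APPROVED_ROLES and record.consent_given
    log.record(actor, "read", record.patient_id, allowed)  # log every attempt
    if not allowed:
        raise PermissionError(f"{actor} may not read {record.patient_id}")
    return record.data
```

Denied attempts are logged as well as successful ones, so the audit trail shows who tried to reach a record, not only who succeeded.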

AI in Healthcare Diagnostics and Decision Support

AI plays a growing role in diagnostics by supporting doctors with scans, imaging, and test results. It can identify patterns that are difficult to detect and support earlier diagnosis.

However, diagnostic AI must be explainable. Doctors need to understand why a system produces a result. Compliant AI relies on verified medical data, avoids bias, and allows human review. AI should support clinical judgment, not replace it.

Prior Authorization and Compliance in AI Systems

Prior authorization remains one of the most time-consuming parts of healthcare administration. AI agents now help automate this process by analyzing patient records and insurance requirements.

Compliance is critical in these systems. AI must access only approved data, protect patient privacy, and maintain detailed records. When developed responsibly, AI reduces delays without compromising patient rights.
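One way to enforce "approved data only" is to filter each record down to an allow-list before the AI agent ever sees it. The sketch below assumes a hypothetical `APPROVED_FIELDS` set and a record stored as a plain dictionary. Real field names would depend on the hospital's data model and the insurer's requirements.

```python
# Hypothetical allow-list of fields a prior-authorization agent may see.
APPROVED_FIELDS = {"diagnosis_code", "procedure_code", "insurance_plan"}


def minimal_view(record: dict) -> dict:
    """Return only the approved fields, dropping everything else."""
    return {key: value for key, value in record.items() if key in APPROVED_FIELDS}


# Usage: the agent receives the filtered view, never the full record.
full_record = {
    "diagnosis_code": "E11.9",
    "procedure_code": "82947",
    "insurance_plan": "plan-a",
    "home_address": "example street 1",
}
agent_input = minimal_view(full_record)
```

An allow-list is a deliberate choice here: a new field added to the record later stays hidden from the agent until someone explicitly approves it.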

Benefits of Compliant AI in Healthcare

The main strength of AI in healthcare does not come only from advanced technology. It comes from compliance and responsibility, which make these systems safe, transparent, and fair for everyone. AI offers several real benefits when developed and used responsibly:

- It reduces paperwork and saves valuable time for healthcare workers

- It improves the accuracy of reports and medical records

- It helps doctors make faster and more informed decisions

- It minimizes delays for patients and ensures better use of hospital resources

- It can detect health risks early by analyzing medical images, lab results, or genetic data

- It supports preventive care by warning doctors before a disease becomes serious

- It improves coordination between hospital departments and reduces record errors

- It increases patient satisfaction through smoother and more reliable care

Ethical Responsibilities in Healthcare AI

Ethics guide how AI should be used in healthcare. Systems must respect patient dignity, privacy, and equality. Bias must be actively monitored, and human oversight must remain central.

Patients should understand how their data is used and retain control over consent. Ethical AI development strengthens public trust and supports long-term adoption.
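Active bias monitoring can start with something very simple, such as comparing how often the system gives a positive result for different patient groups. The sketch below is one assumed approach, not a complete fairness evaluation: the `positive_rate_gap` function and the `(group, approved)` input format are hypothetical, and a large gap is a signal for human review rather than proof of bias.

```python
def positive_rate_gap(outcomes):
    """Compare positive-outcome rates across groups.

    outcomes: iterable of (group, approved) pairs, where approved is a bool.
    Returns (gap, rates): the largest difference between group rates,
    and the per-group rates themselves.
    """
    totals, positives = {}, {}
    for group, approved in outcomes:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if approved else 0)
    rates = {group: positives[group] / totals[group] for group in totals}
    if not rates:
        return 0.0, {}  # no data, nothing to compare
    gap = max(rates.values()) - min(rates.values())
    return gap, rates
```

A team could run this on each batch of decisions and send the batch to a human reviewer when the gap crosses a threshold agreed with clinicians and compliance staff.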

Healthcare AI Growth and Industry Trends

Healthcare AI adoption continues to rise worldwide. Many healthcare leaders report improved decision-making and efficiency after implementing AI tools. At the same time, data protection and transparency have become top priorities.

These trends show that compliance is not slowing innovation. It is enabling healthcare AI to scale safely and responsibly.

AI in Healthcare Statistics

Recent research shows how fast AI is growing in medicine. The numbers below suggest that compliance is not an obstacle. It is what keeps healthcare AI useful and safe.

- Around 70 percent of healthcare leaders say AI has improved decision-making

- More than half of hospitals plan to expand their AI projects in the next few years

- The biggest challenge remains keeping patient data safe and transparent

What the Future Holds for Healthcare AI

The future of healthcare depends on AI systems that are secure, transparent, and compliant. Trust will determine which technologies succeed.

In the coming years, healthcare AI will focus on explainable models, stronger consent frameworks, and stricter data protection. Innovation will continue, but only within ethical and legal boundaries.

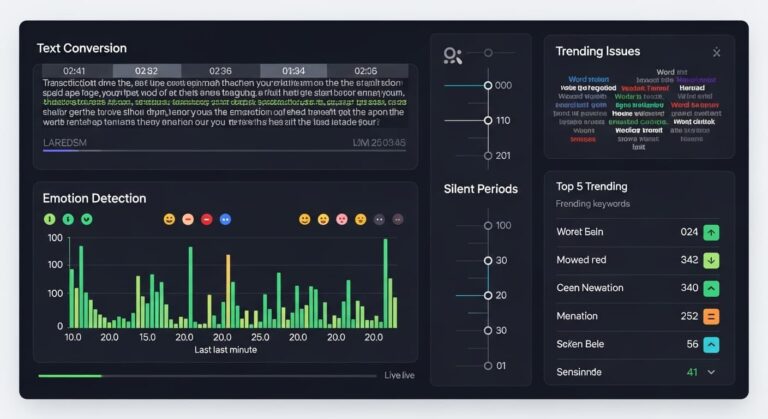

Documentation and Continuous Monitoring

Compliance in healthcare AI does not stop once a system goes live. In reality, this is where the most important work begins. Healthcare AI systems continue to learn, adapt, and interact with sensitive patient data long after deployment. Without proper documentation and monitoring, even a well-designed system can slowly drift out of compliance.

Documentation should clearly record where training data comes from, how patient consent is obtained, how decisions are generated, and how updates are applied. Every change to the model, whether it is a data update, performance tweak, or feature addition, should be logged. This creates a clear audit trail that regulators, hospitals, and internal teams can review when questions arise.
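A change log is more useful as an audit trail when later edits to it can be detected. One common way to get that property, used here as an assumption rather than something the regulations prescribe, is to link each entry to the previous one by a hash. The function names and the fields inside `change` are hypothetical.

```python
import hashlib
import json


def _digest(change: dict, prev_hash: str) -> str:
    # Hash the change together with the previous entry's hash.
    body = {"change": change, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_change(log: list, change: dict) -> dict:
    """Append a change entry linked to the previous entry by hash."""
    prev_hash = log[-1]["hash"] if log else ""
    entry = {"change": change, "prev_hash": prev_hash,
             "hash": _digest(change, prev_hash)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Return True if no entry has been altered, removed, or reordered."""
    prev_hash = ""
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["change"], entry["prev_hash"]):
            return False
        prev_hash = entry["hash"]
    return True


# Usage: log a data update, then a performance tweak.
change_log = []
append_change(change_log, {"type": "data_update", "note": "added new lab results"})
append_change(change_log, {"type": "performance_tweak", "note": "adjusted threshold"})
```

Calling `verify(change_log)` before an audit confirms that the recorded history still matches what was originally written.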

Continuous monitoring focuses on how the AI behaves in real clinical environments. Developers and healthcare organizations should regularly check for data bias, performance drops, security vulnerabilities, and unexpected outputs. Automated alerts can flag unusual access patterns or decision anomalies before they cause harm.
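An automated alert for unusual access can be as simple as counting how many records each user opened in a time window and flagging anyone above a limit. The sketch below assumes access events arrive as `(user, patient_id)` pairs and uses a hypothetical `max_per_user` limit. A real deployment would tune that limit per role and likely add time-of-day or location checks.

```python
from collections import Counter


def flag_unusual_access(events, max_per_user=50):
    """Return users whose access count in this window exceeds the limit.

    events: iterable of (user, patient_id) pairs for one time window.
    max_per_user: hypothetical limit; set it from real usage baselines.
    """
    counts = Counter(user for user, _patient_id in events)
    return sorted(user for user, count in counts.items() if count > max_per_user)
```

The flagged names feed a review queue rather than an automatic block, which keeps a human in the loop for the final decision.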

Regular audits, security testing, and bias evaluations ensure the system remains accurate, lawful, and fair. In healthcare, ongoing monitoring is similar to patient follow-ups. Continuous observation prevents small issues from becoming serious risks.

Collaboration Between Technology and Healthcare Experts

Compliant healthcare AI cannot be built in isolation. Technical expertise alone is not enough in a medical environment where decisions affect human lives. Successful AI systems are created through close collaboration between technology teams and healthcare professionals.

Doctors and clinicians help ensure that AI outputs make medical sense and fit real clinical workflows. Legal and compliance experts interpret healthcare regulations and translate them into system requirements. Privacy specialists guide how sensitive data should be stored, shared, and protected. Developers then turn these insights into reliable and secure AI systems.

Ongoing collaboration is just as important after deployment. Feedback from doctors and hospital staff helps identify practical issues that technical teams may not see. This shared responsibility ensures the AI system stays compliant, useful, and aligned with medical ethics over time.

Conclusion

Ensuring compliance in healthcare-focused AI agent development is not just about following regulations. It is about protecting patients, preserving trust, and making sure technology improves care rather than creating new risks.

Responsible healthcare AI systems balance innovation with accountability. They combine accuracy, strong data protection, transparency, and human oversight. When compliance and technology move forward together, AI becomes a trusted long-term partner in modern healthcare rather than a liability.

FAQs

How does compliance improve patient trust in healthcare AI?

When patients know that an AI system follows legal and ethical standards, they are more comfortable allowing it to access their data. Compliance gives them assurance that their information will not be misused and that the system’s decisions are fair and safe. This trust is the foundation of digital healthcare.

What steps can hospitals take to maintain AI compliance after installation?

Hospitals should create clear policies for data handling, review software performance regularly, and train staff to manage AI responsibly. They should also assign a compliance officer who checks whether the system meets privacy laws and ethical guidelines every few months.

How can explainable AI models help doctors make better decisions?

Explainable AI shows how it reaches a conclusion instead of just giving results. When doctors understand the reasoning, they can compare it with their own medical knowledge. This combination of human expertise and AI accuracy leads to safer and more reliable healthcare outcomes.

What are the risks if an AI system is not compliant in healthcare?

Non-compliant AI can expose sensitive data, make biased decisions, or even violate medical laws. This can lead to legal penalties, loss of reputation, and harm to patients. It is always better to invest in compliance early than to face these problems later.

How can small healthcare organizations manage AI compliance with limited resources?

They can start with basic steps such as encrypting all patient data, using approved AI software, and documenting every action the system takes. Consulting with external experts or using ready-made compliance frameworks can also help smaller teams stay secure and legal without heavy costs.

How can developers ensure that healthcare AI remains compliant during updates?

Every time an AI system is updated, developers must test it again for privacy, accuracy, and fairness. They should keep a detailed record of what changed, recheck all permissions, and verify that the update does not affect compliance rules. Using version control, audit logs, and regular security reviews helps maintain continuous compliance throughout the AI’s life cycle.
